TOPLINE:

Large language models (LLMs) vary in how well they answer rheumatology questions, with ChatGPT-4 showing higher accuracy and quality than Gemini Advanced and Claude 3 Opus. However, for all three models, more than 70% of incorrect answers had the potential to cause harm.

METHODOLOGY:

- Researchers evaluated the accuracy, quality, and safety of three LLMs (Gemini Advanced, Claude 3 Opus, and ChatGPT-4) using questions from the American College of Rheumatology's 2022 Continuous Assessment and Review Evaluation (CARE) question bank.

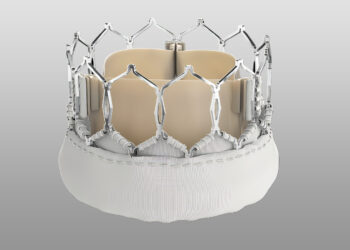

- They used 40 questions: 30 randomly selected questions and 10 questions that required image assessment.

- Five board-certified rheumatologists from various countries independently rated all the answers provided by the LLMs.

- Accuracy was evaluated by comparing each LLM’s answers with the correct answers provided by the question bank.

- Quality was evaluated using a framework covering six domains: scientific consensus, comprehension, retrieval, reasoning, inappropriate content, and missing content. Safety was evaluated by assessing each answer's potential to cause harm.

TAKEAWAY:

- ChatGPT-4 achieved the highest accuracy (78%), outperforming Claude 3 Opus (63%) and Gemini Advanced (53%). The passing threshold for the CARE question bank is 70%.

- For image-containing questions, ChatGPT-4 and Claude 3 Opus each achieved 80% accuracy, while Gemini Advanced achieved 30% accuracy.

- ChatGPT-4 generally produced higher-quality answers. It outperformed Claude 3 Opus in scientific consensus (P …), … (P = .0074), and missing content (P = .011), and it surpassed Gemini Advanced in all quality-related domains (P …).

- Claude 3 Opus produced the highest proportion of potentially harmful answers at 28%, followed by Gemini Advanced at 15% and ChatGPT-4 at 13%.

IN PRACTICE:

“Our findings suggest that ChatGPT-4 is currently the more accurate and reliable LLM for rheumatology, aligning well with current scientific consensus and including fewer inappropriate or missing content elements,” the authors wrote. “Both patients and clinicians should be aware that LLMs can provide highly convincing but potentially harmful answers. Continuous evaluation of LLMs is essential for their safe clinical application, especially in complex fields such as rheumatology,” they added.

SOURCE:

This study was led by Jaime Flores-Gouyonnet, Mayo Clinic, Rochester, Minnesota. It was published online on January 22, 2025, in The Lancet Rheumatology.

LIMITATIONS:

The use of questions from a single question bank may limit the generalizability of the findings to other sources or real-world clinical scenarios. The evaluation framework was adapted from a tool for generative artificial intelligence and was not specifically validated for the assessment of LLMs. With the rapid evolution of LLMs, performance differences will probably change over time.

DISCLOSURES:

One author was supported by the Rheumatology Research Foundation Investigator Award, the Lupus Research Alliance Diversity in Lupus Research Award, the Centers for Disease Control and Prevention, and the Mayo Clinic. The authors declared no conflicts of interest.

This article was created using several editorial tools, including AI, as part of the process. Human editors reviewed this content before publication.

Source link: https://www.medscape.com/viewarticle/artificial-intelligence-large-language-models-not-so-great-2025a10001xj?src=rss

Publish date: 2025-01-27 08:38:20

Copyright for syndicated content belongs to the linked Source.