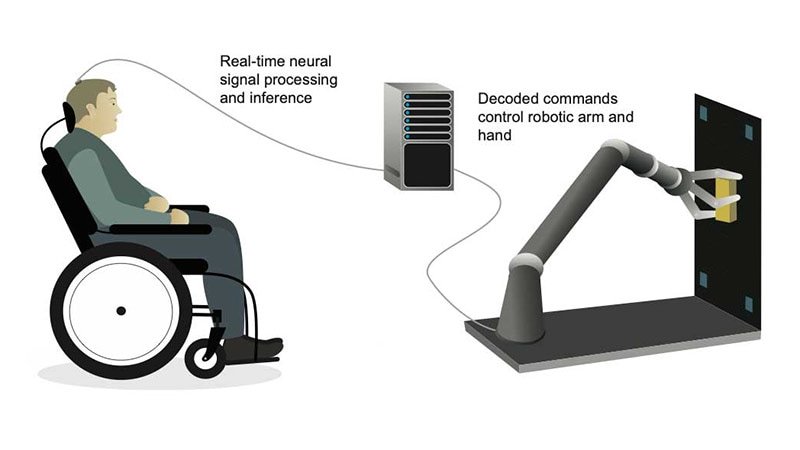

A new brain-computer interface (BCI) powered by artificial intelligence (AI) allowed a paralyzed man, who could not speak or move, to control a robotic arm to grasp and move objects simply by imagining himself performing these movements.

Notably, the BCI worked for 7 months without needing to be adjusted, compared with just a day or two for other devices.

In an interview with Medscape Medical News, neurologist Karunesh Ganguly, MD, PhD, of the University of California, San Francisco (UCSF) Weill Institute for Neurosciences, explained that older BCI systems rely on spike recordings, in which tiny electrodes implanted in brain tissue record signals from single neurons or small groups of neurons near the electrode. However, these signals are unstable because of brain movement.

The new BCI system uses surface recordings, which are less invasive and more stable.

“This approach is more on the surface of the brain itself. It’s still invasive, requires surgery, but it’s able to record signals more stably,” Ganguly said.

In addition, the AI component of the BCI tracks “drift” in the brain signals over time. Initially, the system needs about 8 to 9 days to stabilize, after which it remains stable for up to 7 months before requiring a brief recalibration, Ganguly noted.

The study was published online March 6 in the journal Cell.

Next Generation of Brain-Computer Interfaces

To test the AI-powered BCI, the investigators worked with a man who had been paralyzed by a stroke several years earlier. He was unable to speak or move.

Electrodes implanted on the surface of his brain picked up brain activity when he imagined moving different parts of his body, and these data were used to train the AI.

With practice, the man could make the robotic arm pick up blocks, turn them, and move them to new locations. He was also able to open a cabinet, take out a cup, and hold it up to a water dispenser.

Seven months later, the participant was still able to control the robotic arm after a 15-minute “tune-up” to adjust for how his movement representations had drifted since he had begun using the device.

Other AI-driven BCIs may require daily retraining because the brain signals shift. This new system reduces that burden.

“It’s the difference between, I would say, like trying to re-learn a bike daily, versus having a bike that you learned over a week or two, and then you’re ready to go from then on,” said Ganguly.

The researchers are now refining the AI models to make the robotic arm move faster and more smoothly. The goal is a system that can be used without supervision in daily life and in the community.

“This blending of learning between humans and AI is the next phase for these brain-computer interfaces. It’s what we need to achieve sophisticated, lifelike function,” Ganguly said in a news release.

This research was funded by the National Institutes of Health and the UCSF Weill Institute for Neurosciences.

Source link: https://www.medscape.com/viewarticle/ai-powered-interface-lets-man-control-robotic-arm-thought-2025a100069b?src=rss

Publish date: 2025-03-14 18:38:00

Copyright for syndicated content belongs to the linked Source.