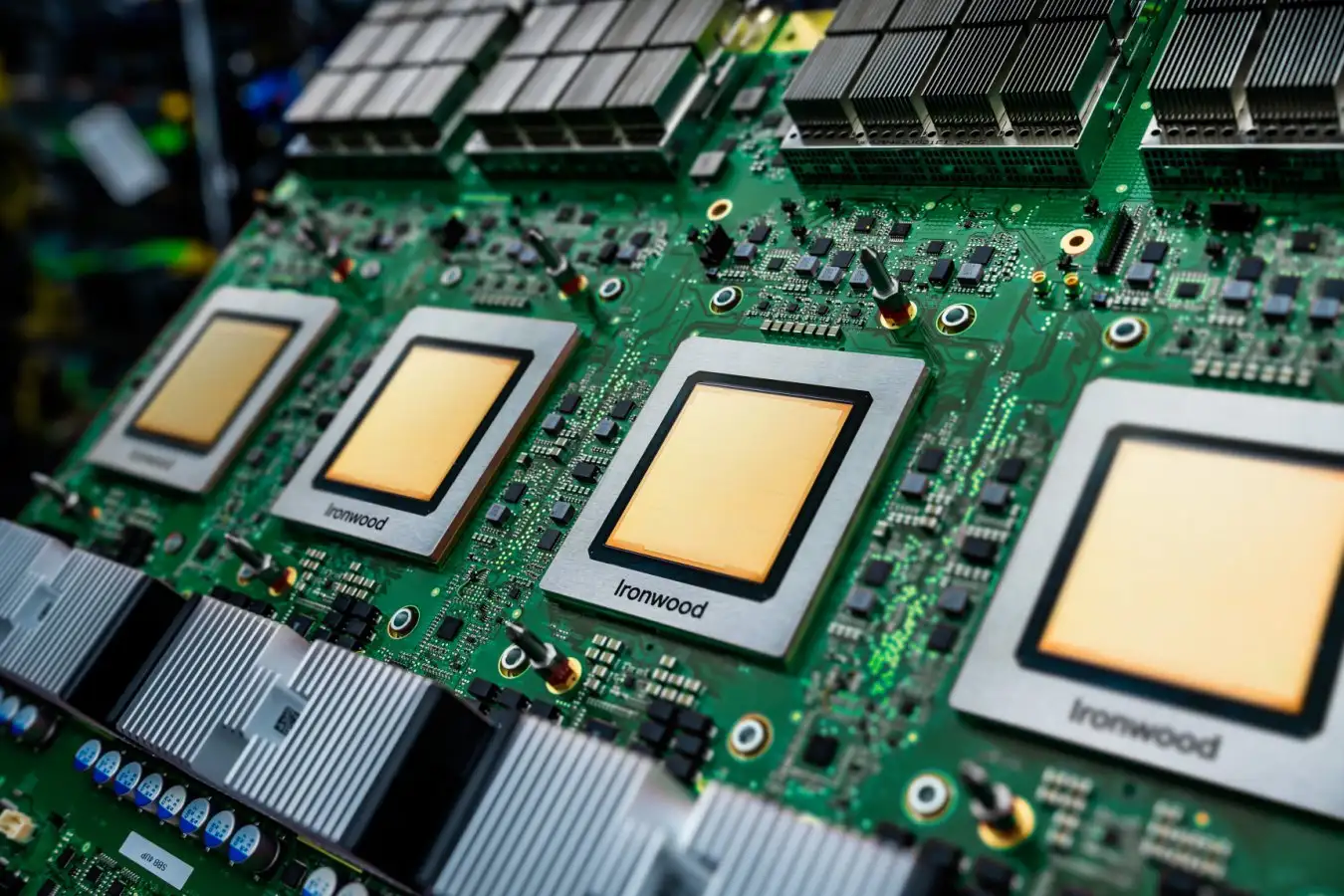

Ironwood is Google’s latest tensor processing unit

Nvidia’s position as the dominant supplier of AI chips may be under threat from a specialised chip pioneered by Google, with reports suggesting companies like Meta and Anthropic are looking to spend billions on Google’s tensor processing units.

What is a TPU?

The success of the artificial intelligence industry has been in large part based on graphics processing units (GPUs), a kind of computer chip that can perform many calculations in parallel, rather than one after the other like the central processing units (CPUs) that power most computers.

GPUs were originally developed to assist with computer graphics, as the name suggests, and gaming. “If I have a lot of pixels in a space and I need to do a rotation of this to calculate a new camera view, this is an operation that can be done in parallel, for many different pixels,” says Francesco Conti at the University of Bologna in Italy.
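The rotation Conti describes can be sketched in a few lines of Python. This is an illustration only: the names `rotate_point` and `rotate_all` and the sample coordinates are assumptions made for the example, and the code runs one point at a time on an ordinary processor. The point it shows is that each pixel's new position depends only on that pixel, which is what allows a GPU to work on many of them at once.

```python
import math

def rotate_point(point, angle_rad):
    """Rotate one (x, y) point about the origin by angle_rad radians."""
    x, y = point
    cos_a, sin_a = math.cos(angle_rad), math.sin(angle_rad)
    return (x * cos_a - y * sin_a, x * sin_a + y * cos_a)

def rotate_all(points, angle_rad):
    # No call depends on the result of any other call, so parallel
    # hardware could handle every point at the same time. This plain
    # loop does them one after the other, as a CPU would.
    return [rotate_point(p, angle_rad) for p in points]

# Hypothetical pixel positions, rotated a quarter turn anticlockwise.
pixels = [(1.0, 0.0), (0.0, 1.0), (2.0, 2.0)]
rotated = rotate_all(pixels, math.pi / 2)
```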

This ability to do calculations in parallel happened to be useful for training and running AI models, which rely heavily on matrix multiplication: calculations on vast grids of numbers that can be performed at the same time. “GPUs are a very general architecture, but they are extremely suited to applications that show a high degree of parallelism,” says Conti.
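As a rough sketch of what matrix multiplication involves, the Python below multiplies two small grids of numbers. The function name `matmul` and the sample values are assumptions chosen for illustration, and real AI workloads use grids with thousands of rows and columns on dedicated hardware rather than Python lists. Each cell of the result is computed from one row of the first grid and one column of the second, independently of every other cell, which is why the work parallelises so well.

```python
def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), each a list of rows."""
    k = len(b)
    if any(len(row) != k for row in a):
        raise ValueError("columns of a must match rows of b")
    m = len(b[0])
    # Every output cell (i, j) is its own sum of products, so all
    # cells could in principle be computed at the same time.
    return [
        [sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
        for i in range(len(a))
    ]

# Hypothetical 2 x 2 grids standing in for model inputs and weights.
inputs = [[1, 2], [3, 4]]
weights = [[5, 6], [7, 8]]
outputs = matmul(inputs, weights)  # [[19, 22], [43, 50]]
```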

However, because GPUs weren’t originally designed with AI in mind, there can be inefficiencies in the way they translate the calculations that are performed on the chips. Tensor processing units (TPUs), which were first unveiled by Google in 2016, are instead designed solely around matrix multiplication, says Conti, which is the main calculation needed for training and running large AI models.

This year, Google released the seventh generation of its TPU, called Ironwood, which powers many of the company’s AI models, such as Gemini and the protein-modelling AlphaFold.

Are TPUs much better than GPUs for AI?

Technologically, TPUs are more of a subset of GPUs than an entirely different chip, says Simon McIntosh-Smith at the University of Bristol, UK. “They focus on the bits that GPUs do more specifically aimed at training and inference for AI, but actually they’re in some ways more similar to GPUs than you might think.” But because TPUs are designed with certain AI applications in mind, they can be much more efficient for these jobs and save potentially tens or hundreds of millions of dollars, he says.

However, this specialisation also has its disadvantages and can make TPUs inflexible if the AI models change significantly between generations, says Conti. “If you don’t have the flexibility on your [TPU], you have to do [calculations] on the CPU of your node in the data centre, and this will slow you down immensely,” says Conti.

One advantage that Nvidia GPUs have traditionally held is that there is simple software available that can help AI designers run their code on Nvidia chips. This didn’t exist in the same way for TPUs when they first came about, but the chips are now at a stage where they are more straightforward to use, says Conti. “With the TPU, you can now do the same [as GPUs],” he says. “Now that you have enabled that, it’s clear that the availability becomes a major factor.”

Who is building TPUs?

Although Google first launched the TPU, many of the largest AI companies (known as hyperscalers), as well as smaller start-ups, have now started developing their own specialised AI chips. These include Amazon, which uses its own Trainium chips to train its AI models.

“Most of the hyperscalers have their own internal programmes, and that’s partly because GPUs got so expensive because the demand was outstripping supply, and it might be cheaper to design and build your own,” says McIntosh-Smith.

How will TPUs affect the AI industry?

Google has been developing its TPUs for over a decade, but it has mostly been using these chips for its own AI models. What appears to be changing now is that other large companies, like Meta and Anthropic, are making sizeable purchases of computing power from Google’s TPUs. “What we haven’t heard about is big customers switching, and maybe that’s what’s starting to happen now,” says McIntosh-Smith. “They’ve matured enough and there’s enough of them.”

As well as giving large companies more choice, diversifying could make good financial sense for them. “It might even be that that means you get a better deal from Nvidia in the future,” he says.

Source link : https://www.newscientist.com/article/2506354-why-googles-custom-ai-chips-are-shaking-up-the-tech-industry/?utm_campaign=RSS%7CNSNS&utm_source=NSNS&utm_medium=RSS&utm_content=home

Publish date : 2025-11-28 16:00:00

Copyright for syndicated content belongs to the linked Source.